By Biswajit Biswas

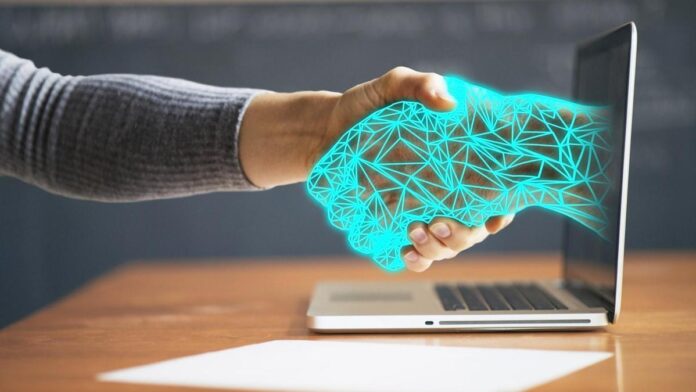

Artificial Intelligence (AI) is rapidly transforming society, revolutionizing industries, and redefining human interactions. However, its impact on human rights brings both opportunities and challenges. At this critical juncture, it is imperative to ensure that AI development and deployment adhere to robust ethical principles, respecting human dignity and inclusivity while addressing unintended consequences.

Opportunities and Challenges of AI in Human Rights

AI has the potential to advance human rights by fostering inclusivity, accessibility, and justice. For instance, AI-driven assistive technologies help visually impaired individuals navigate the world through real-time audio descriptions generated from wearable-camera footage. Similarly, AI applications for individuals with hearing impairments or mobility challenges are widening access to essential services. These innovations empower marginalized communities and support their fuller participation in society.

AI is also narrowing the gap in legal access. Tools such as AI-enabled virtual legal assistants provide first-level legal advice, helping people understand their rights and the legal processes they can pursue. This is particularly beneficial for those hesitant to approach traditional legal channels due to stigma or financial constraints.


However, alongside these opportunities lie significant challenges. One pressing issue is bias in AI systems, often stemming from unbalanced training data. Such biases can perpetuate inequalities, particularly against underrepresented groups. For instance, facial recognition systems have been criticized for disproportionately misidentifying individuals from minority communities, leading to potential rights violations. Additionally, the lack of transparency in AI decision-making processes poses risks of misuse, undermining accountability. To ensure AI systems uphold human rights, ethical principles must be integral to their design and deployment.

Ethical Principles in AI Development and Decision-Making

Developers must actively mitigate biases in training data and algorithms. Techniques such as synthetic data generation can help address imbalances in datasets, supporting more equitable outcomes across demographic groups.

Equally important is the need for explainability and transparency. AI systems must provide clear, understandable reasoning behind their decisions. Explainable AI enables stakeholders to trace the logic of decisions, fostering trust and enabling corrective measures when errors occur.

Governments, businesses, and AI developers share a collective responsibility to establish governance frameworks. These frameworks should regulate AI use, ensure compliance with ethical norms, and hold developers accountable for adverse impacts. Alongside them, the concept of responsible AI and techniques such as post-quantum cryptography are emerging to address security and other future risks.
